Data is automatically sharded across multiple Redis nodes.

Sentinel features:


Sentinel configuration file explained

Reference: https://segmentfault.com/a/1190000002680804
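
As a rough illustration of what such a file contains, the sketch below writes a minimal `sentinel.conf`. The directives are standard Sentinel options, but the master name `mymaster`, the address `127.0.0.1:6379`, the quorum of 2, and the timeout values are placeholder assumptions, not values taken from this deployment.

```shell
# Write a minimal sentinel.conf (all values are illustrative assumptions).
cat > sentinel.conf <<'EOF'
# Port the Sentinel process listens on (26379 is the conventional default)
port 26379
# Monitor the master named "mymaster" at 127.0.0.1:6379;
# 2 Sentinels must agree before the master is treated as objectively down
sentinel monitor mymaster 127.0.0.1 6379 2
# Milliseconds without a valid reply before this Sentinel marks the master as subjectively down
sentinel down-after-milliseconds mymaster 30000
# How many replicas are re-pointed at the new master at the same time after a failover
sentinel parallel-syncs mymaster 1
# Failover timeout in milliseconds
sentinel failover-timeout mymaster 180000
EOF
```

A Sentinel started with `redis-sentinel sentinel.conf` rewrites this file as it discovers replicas and other Sentinels, so the file has to be writable by the process.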

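When the master node goes down, Sentinel promotes one of the replicas to master. The election mechanism is described here: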

https://blog.csdn.net/tr1912/article/details/81265007

Installing the Redis cluster

First, add the Helm repository and search it for the redis chart.
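
The original commands did not survive, so the following is only a sketch of this step. It assumes Helm 3 and the `redis-ha` chart from the DandyDeveloper chart repository; the alias `dandydev` and the helper name `add_repo_and_search` are made up for illustration, and the repository actually used here may have been a different one.

```shell
# Assumption: the redis-ha chart from the DandyDeveloper repository, under the alias "dandydev".
REPO_NAME="dandydev"
REPO_URL="https://dandydeveloper.github.io/charts"

add_repo_and_search() {
  helm repo add "$REPO_NAME" "$REPO_URL"   # register the chart repository
  helm repo update                         # refresh the local chart index
  helm search repo redis                   # Helm 3 syntax; Helm 2 used `helm search redis`
}

# Call once helm is installed and the repository is reachable:
# add_repo_and_search
```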

cat value.yaml

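
The contents of value.yaml were lost, so what follows is a hypothetical example rather than the file used here. It assumes the `redis-ha` chart, whose values include `replicas`, `redis.masterGroupName`, `sentinel.quorum`, and `persistentVolume`; every number below is a placeholder, and `helm show values <chart>` lists the keys a given chart version really accepts.

```shell
# Write a hypothetical value.yaml for the redis-ha chart (all values are assumptions).
cat > value.yaml <<'EOF'
# One master plus two replicas, each pod also running a Sentinel
replicas: 3
# Require each pod to be scheduled on a different node
hardAntiAffinity: true
redis:
  port: 6379
  # Name under which the Sentinels track the master
  masterGroupName: mymaster
sentinel:
  port: 26379
  # Sentinels that must agree before a failover starts
  quorum: 2
persistentVolume:
  enabled: true
  size: 10Gi
EOF
```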

Next, create the Redis cluster with Helm.
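
A minimal sketch of the install step, assuming Helm 3.2 or later. The release name `redis-ha`, the namespace `redis`, and the chart reference `dandydev/redis-ha` are assumptions; only the values file name value.yaml comes from the text.

```shell
# Assumed names; adjust them to your environment.
RELEASE="redis-ha"
NAMESPACE="redis"
CHART="dandydev/redis-ha"

install_redis_ha() {
  # --create-namespace creates the namespace if it does not exist yet
  helm install "$RELEASE" "$CHART" \
    --namespace "$NAMESPACE" --create-namespace \
    -f value.yaml
}

# Call once kubectl/helm point at the target cluster:
# install_redis_ha
```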

Once creation succeeds, Helm prints a summary of the release, including its status and the chart's usage notes.

Now wait for the Redis cluster to come up. You can also check from the command line whether it was created successfully.
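
One way to run that check, sketched under the assumption that the release is called `redis-ha` and lives in the `redis` namespace (both names are hypothetical):

```shell
RELEASE="redis-ha"
NAMESPACE="redis"

check_redis_ha() {
  # Release state as Helm records it (deployed, failed, ...)
  helm status "$RELEASE" --namespace "$NAMESPACE"
  # Pods and Services in the namespace; pods should reach Running with all containers ready
  kubectl get pods,svc --namespace "$NAMESPACE" -o wide
}

# check_redis_ha
```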

With everything created successfully, the next step is to use Redis.

Verifying redis-ha
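
The verification commands are missing from the text, so here is a hedged sketch. It assumes the pod is named `redis-ha-server-0`, that the Redis container inside it is called `redis`, and that authentication is disabled; `kubectl get pods -n redis` shows the real pod names.

```shell
NAMESPACE="redis"
POD="redis-ha-server-0"   # assumed pod name

# Basic liveness check: a healthy instance answers PONG.
ping_redis() {
  kubectl exec -n "$NAMESPACE" "$POD" -c redis -- redis-cli ping
}

# Show the role of this instance (master or slave) and its connected replicas.
replication_info() {
  kubectl exec -n "$NAMESPACE" "$POD" -c redis -- redis-cli info replication
}

# ping_redis
# replication_info
```

In the `info replication` output, the `role` field tells you whether the pod you queried is currently the master.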

If Redis needs to be exposed for external use, deploy an additional NodePort Service.
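
The original manifest is not preserved; the sketch below writes a hypothetical one. The Service name, the namespace, the `nodePort` value 30379, and above all the selector labels (`app: redis-ha`, `release: redis-ha`) are assumptions that must be checked against the labels on the actual pods, for example with `kubectl get pods -n redis --show-labels`.

```shell
# Write a hypothetical NodePort Service manifest (names, labels, and ports are assumptions).
cat > redis-nodeport.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: redis-ha-nodeport
  namespace: redis
spec:
  type: NodePort
  selector:
    app: redis-ha
    release: redis-ha
  ports:
    - name: redis
      port: 6379
      targetPort: 6379
      nodePort: 30379
EOF

# Apply it against the cluster:
# kubectl apply -f redis-nodeport.yaml
```

Note that a selector like this matches every Redis pod, so an external client may land on a replica, which rejects writes by default. Clients that need to write should find the current master through Sentinel.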

Failover experiment

Stop the master Redis:
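
How the master was stopped in the original is not recorded. As one possible approach on Kubernetes, the sketch below deletes the master's pod and then asks a Sentinel in another pod who the master is. The pod names, the `sentinel` container name, and the master group `mymaster` are assumptions. Whether a failover actually happens depends on the pod staying away longer than Sentinel's `down-after-milliseconds`.

```shell
NAMESPACE="redis"
MASTER_GROUP="mymaster"   # assumed Sentinel master group name

# Ask the Sentinel in pod $1 which address it currently considers the master.
current_master() {
  kubectl exec -n "$NAMESPACE" "$1" -c sentinel -- \
    redis-cli -p 26379 sentinel get-master-addr-by-name "$MASTER_GROUP"
}

# Stop the Redis instance in pod $1 by deleting the pod; the StatefulSet recreates it afterwards.
stop_redis_pod() {
  kubectl delete pod -n "$NAMESPACE" "$1"
}

# Example sequence, assuming redis-ha-server-0 currently holds the master role:
# current_master redis-ha-server-1
# stop_redis_pod redis-ha-server-0
# sleep 30
# current_master redis-ha-server-1
```

If the second `current_master` call reports a different address than the first, the Sentinels have promoted a replica.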

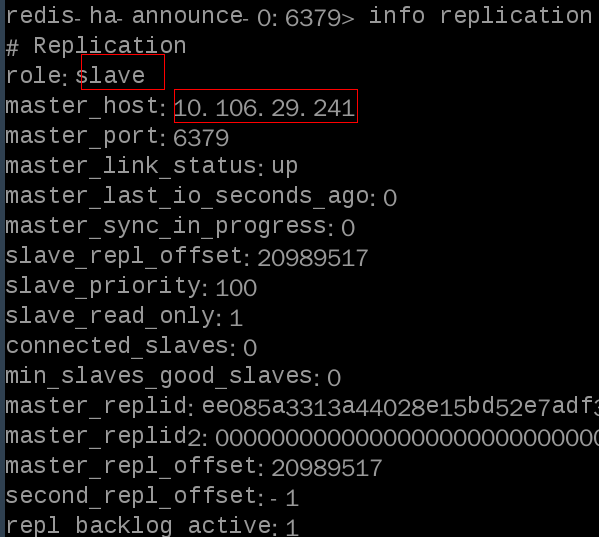

Viewed from the former master, the Redis replication status now points to 10.106.29.241, the IP of the third Redis instance, so the failover succeeded.